According to Nielsen, using generative AI in business improves users’ performance by 66%.

That’s because the power of AI can:

A lot of business leaders are starting to catch on to these benefits and explore their options. If you’re an IT professional, you’ve probably been thinking more about data security. How can you know this new AI technology will meet your standards?

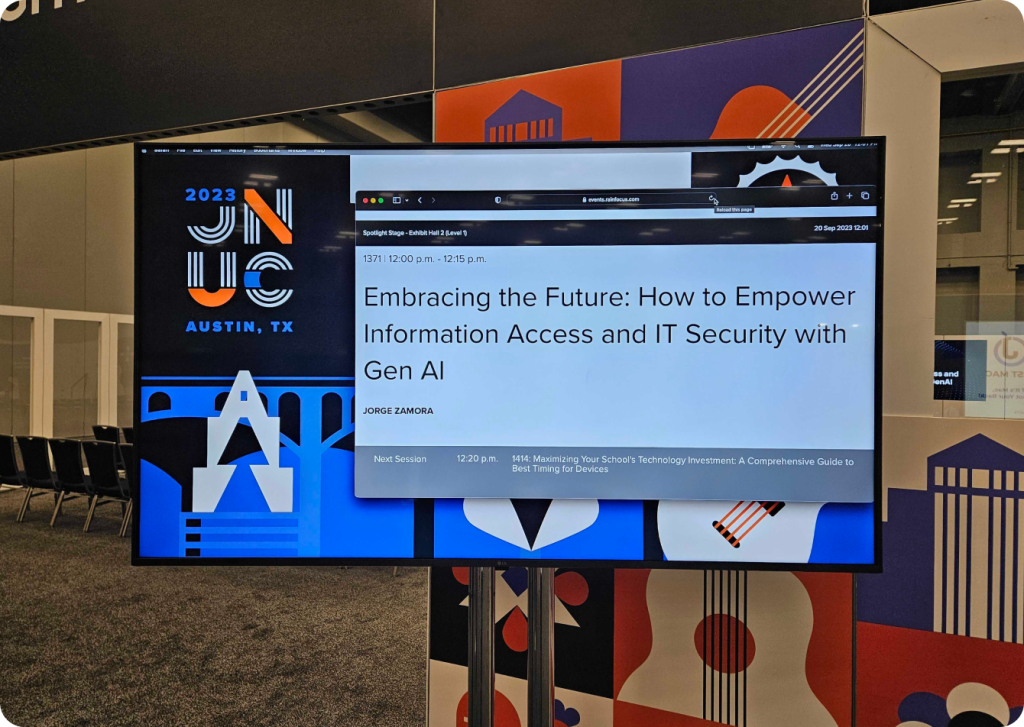

During JNUC 2023, GoLinks CEO Jorge Zamora discussed the main considerations you should be aware of when diving into GenAI. Here are the takeaways from his spotlight session.

The GenAI landscape

In the past year, GenAI has grown dramatically in popularity as a tool to help businesses improve workflows. In fact:

- Adobe found that 15% of enterprises are using AI, and 31% said it is on the agenda for the next 12 months.

- TechTarget found that, out of 670 organizations surveyed, the majority expect to have adopted GenAI within 12 months.

- VentureBeat found that 54.6% of organizations are experimenting with generative AI.

So it’s becoming pretty clear that if you want to keep up in 2023 and beyond, now is the time to get started with AI.

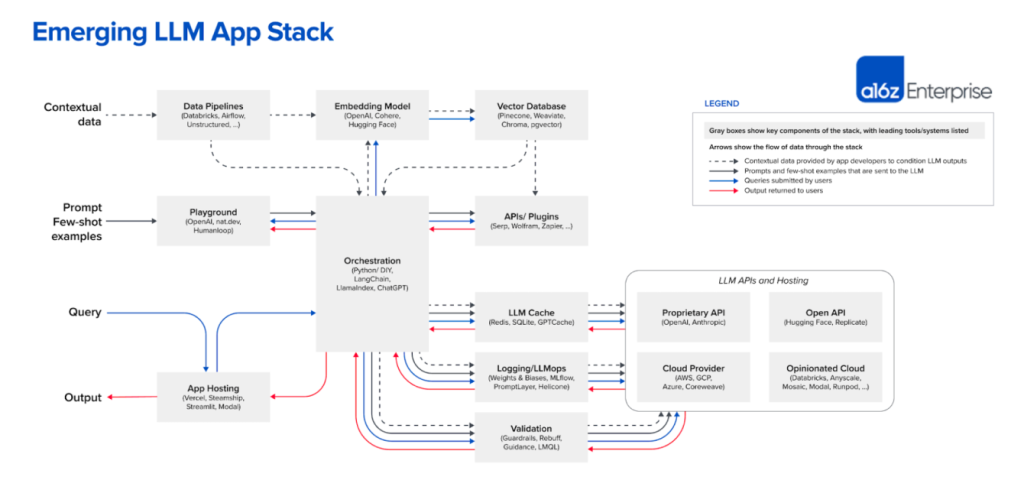

What to consider when it comes to AI

The first thing to understand is that text-based generative AI tools are built on a large language model (LLM). Some major LLMs include PaLM 2 by Google (which powers Bard), Llama by Meta, and GPT by OpenAI. New LLMs are coming out monthly, like Anthropic's Claude and Cohere's Command models, just to name a few.

For IT professionals who are concerned with security, there are two main things to consider when it comes to LLMs.

- The LLM won’t know anything about your business.

If you use an LLM like GPT-4, the model's knowledge only extends to September 2021. On top of that, it was trained only on data it could access externally, such as public web content. That means it won't know anything about your internal business.

- The LLM will need to be supplemented with your data.

In order for the LLM to generate the specific information your organization needs, it will need to be fed your private data. You can connect it with things like your knowledge base, people data, and SaaS apps.
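One common way to supplement an LLM with private data is often called retrieval-augmented generation: find the internal snippets relevant to a question and paste them into the prompt. The sketch below shows only that prompt-assembly step, under loud assumptions: `Document`, `retrieve`, and `build_prompt` are hypothetical names, the word-overlap retriever stands in for a real search index, and no actual LLM is called.

```python
from dataclasses import dataclass


@dataclass
class Document:
    """A snippet from an internal source such as a wiki page or SaaS app."""
    source: str
    text: str


def retrieve(query: str, docs: list[Document], k: int = 2) -> list[Document]:
    """Return up to k documents sharing the most words with the query (naive retriever)."""
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(d.text.lower().split())), d) for d in docs]
    # Drop documents with no overlapping words, then sort best matches first.
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, docs: list[Document]) -> str:
    """Prepend retrieved private context so the LLM can answer questions about your business."""
    context = "\n".join(f"[{d.source}] {d.text}" for d in retrieve(query, docs))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


docs = [
    Document("wiki", "the vacation policy allows 20 days per year"),
    Document("hr", "payroll runs on the last friday of the month"),
]
print(build_prompt("how many vacation days do we get", docs))
```

Only the wiki snippet makes it into the prompt, because the payroll record shares no words with the question. The model itself is never retrained; it simply reads your data at question time.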

What are your AI options?

You could build it yourself

If you’re feeling ambitious, you may consider building a GenAI tool internally.

Pros:

- You have control over your data and can store it on your own systems

- You design the backend orchestration and can fine-tune it to your liking

- You could even build your own internal LLM

Cons:

- Significant expense and expertise are required

- You must manually integrate all your apps (the average company has 130 SaaS apps!)

- You have to build in proper security to avoid the risk of cyber attacks like prompt injection

Having complete control sounds great, but building and maintaining a secure AI tool can carry massive overhead. Is your org ready to commit to something like this?

You could use a vendor

More and more companies are offering different AI applications for organizations to use internally.

Pros:

- You can get started almost instantly

- They have dedicated teams to focus on setup and problem-solving

- They build all the connectors and integrations and maintain them

Cons:

- There are security risks — you have to give a third party your private data

- GenAI is typically siloed to that vendor’s product

All in all, a top concern for organizations using a vendor is data privacy and leaking intellectual property.

Don’t forget about the user experience

Whether you build or buy, there are key features you'll want in order to create the best experience for your users: your employees.

A holistic GenAI solution includes:

Knowledge Inputs: Language model and semantic context

The foundation of any GenAI solution lies in its ability to understand and process information effectively. The language model is the backbone, enabling the AI system to comprehend human language; multimodal models extend this to speech and visual content.

Semantic context involves deciphering the meaning and nuances within the language. This contextual awareness enables the AI to respond more intelligently to user queries.
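Semantic context is commonly implemented with embeddings: text is mapped to vectors so that passages with similar meaning point in similar directions, and cosine similarity measures how close they are. The sketch below assumes hand-written 3-dimensional vectors and a hypothetical `semantic_rank` helper; a real system would get its vectors from an embedding model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy "embeddings" (hypothetical); real embedding models produce vectors
# with hundreds or thousands of dimensions.
DOC_EMBEDDINGS = {
    "PTO policy": [0.9, 0.1, 0.0],
    "Expense reports": [0.1, 0.8, 0.2],
    "Office Wi-Fi setup": [0.0, 0.2, 0.9],
}


def semantic_rank(query_embedding: list[float]) -> list[str]:
    """Return document titles ordered from most to least semantically similar."""
    return sorted(
        DOC_EMBEDDINGS,
        key=lambda title: cosine_similarity(query_embedding, DOC_EMBEDDINGS[title]),
        reverse=True,
    )


# A query like "how much time off do I get?" might embed close to the PTO doc
# even though it never uses the words "PTO" or "policy".
print(semantic_rank([0.8, 0.2, 0.1]))  # ['PTO policy', 'Expense reports', 'Office Wi-Fi setup']
```

This is what lets the AI match intent rather than exact keywords.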

Data Connectors: Integrated apps and knowledge ingestion

A GenAI solution should seamlessly integrate with various applications and data sources. This integration is essential to ensure that the AI can provide valuable insights and perform tasks specific to your organization’s needs.

Knowledge ingestion is another critical aspect. This involves continuously updating the AI’s knowledge base with fresh data from both internal and external sources. By regularly ingesting new information, the GenAI can stay up-to-date with the latest trends, news, and developments, ensuring its responses remain relevant and accurate.
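A connector plus incremental ingestion can be sketched as follows. The assumptions: each connector (here the hypothetical `wiki_connector`) returns documents with a last-modified timestamp, and the knowledge base is a plain in-memory dictionary. Only documents changed since the last sync are re-ingested, which keeps continuous updates cheap.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class SourceDocument:
    """A record returned by a (hypothetical) connector for one app."""
    doc_id: str
    text: str
    updated_at: datetime


@dataclass
class KnowledgeIndex:
    """In-memory stand-in for the AI's knowledge base."""
    docs: dict[str, str] = field(default_factory=dict)
    last_sync: datetime = datetime.min.replace(tzinfo=timezone.utc)

    def ingest(self, batch: list[SourceDocument]) -> int:
        """Add or refresh documents changed since the last sync; return how many changed."""
        changed = [d for d in batch if d.updated_at > self.last_sync]
        for d in changed:
            self.docs[d.doc_id] = d.text  # insert a new doc or overwrite a stale copy
        if changed:
            # Advance the watermark so the next sync skips what we already have.
            self.last_sync = max(d.updated_at for d in changed)
        return len(changed)


def wiki_connector() -> list[SourceDocument]:
    """Hypothetical connector; a real one would call the wiki's API."""
    return [
        SourceDocument("wiki/pto", "PTO policy: 20 days", datetime(2023, 10, 1, tzinfo=timezone.utc)),
        SourceDocument("wiki/wifi", "Wi-Fi setup guide", datetime(2023, 10, 2, tzinfo=timezone.utc)),
    ]


index = KnowledgeIndex()
print(index.ingest(wiki_connector()))  # 2: both documents are new
print(index.ingest(wiki_connector()))  # 0: nothing changed since last sync
```

A production pipeline would also need to handle deletions and documents that share the same timestamp; this sketch ignores both.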

Access Permissions: User and resource-level restrictions

GenAI systems should implement user authentication and authorization mechanisms. This way, only authorized users can access and interact with the AI. User roles and permissions can be defined to specify what actions each user can perform.

Beyond user access, resource-level restrictions define what data and functionalities the AI can access. This is crucial for maintaining the integrity of sensitive data.
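Combining user roles with resource-level restrictions can be as simple as a set intersection. The sketch below assumes a group-based model (`User`, `Resource`, and `visible_resources` are hypothetical names) and filters results before they ever reach the LLM, since content placed in a model's context can't be reliably hidden from its answers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    allowed_groups: frozenset[str]  # resource-level restriction: who may read it


@dataclass(frozen=True)
class User:
    name: str
    groups: frozenset[str]  # user-level roles (assumed to come from your identity system)


def visible_resources(user: User, resources: list[Resource]) -> list[Resource]:
    """Keep only resources the user is allowed to read."""
    # Filtering happens before retrieval results reach the LLM, so the model
    # never sees content the user couldn't open directly.
    return [r for r in resources if user.groups & r.allowed_groups]


resources = [
    Resource("Company handbook", frozenset({"everyone"})),
    Resource("Salary bands", frozenset({"hr"})),
]
alice = User("alice", frozenset({"everyone", "engineering"}))
print([r.name for r in visible_resources(alice, resources)])  # ['Company handbook']
```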

User Interface: Available where and how you work

Generative AI should work where you work and how you work. This is another big consideration when bringing this tool into your workplace. Jorge shared, “You really want to focus on the user experience. Can they chat with it? Is there a chatbot? Can they access it from every app? Can they access it on their phone?”

You should be able to use AI within the tools and apps you use daily: in your Slack channels, through chatbots, on your intranet, and so on. This way, GenAI becomes part of your workflows instead of interrupting them.

Secure AI solutions for faster workflows

To wrap up the spotlight session, Jorge introduced our two GenAI products: GoLinks and GoSearch. Let’s dive into them.

GoLinks: Secure, AI-enhanced info access and sharing

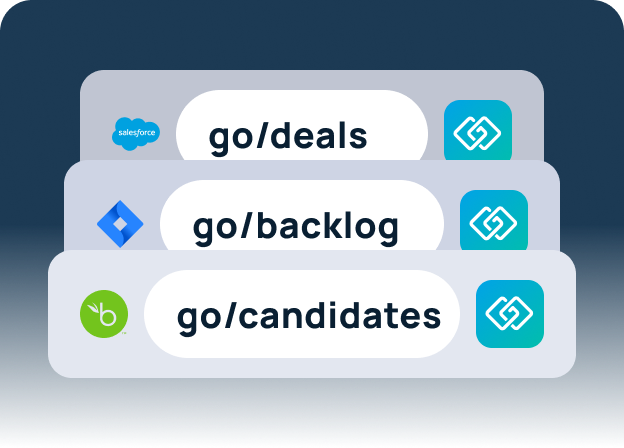

GoLinks is a tool that lets you access and share information with intuitive keyword short links that you enter in your browser.

For example, a long Google Docs link like this:

https://docs.google.com/presentation/d/1yVcZihiShg ❌

Can become a short, memorable link like this:

go/presentation ✅
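Conceptually, a go link is a keyword lookup: the short link maps to a stored destination, which the resolver then redirects to. The sketch below is a hypothetical illustration, not GoLinks' implementation; the `LINKS` table and `resolve` function are invented for this example, and case-insensitive keywords are an assumption.

```python
from typing import Optional

# Keyword -> destination URL (example link taken from the article above).
LINKS = {
    "presentation": "https://docs.google.com/presentation/d/1yVcZihiShg",
}


def resolve(short_link: str) -> Optional[str]:
    """Map 'go/<keyword>' to its destination URL, or return None if no such link exists."""
    prefix = "go/"
    if not short_link.startswith(prefix):
        return None
    # Assumption: keywords are case-insensitive and a trailing slash is ignored.
    keyword = short_link[len(prefix):].strip("/").lower()
    return LINKS.get(keyword)


print(resolve("go/presentation"))  # https://docs.google.com/presentation/d/1yVcZihiShg
print(resolve("go/unknown"))       # None
```

In a deployed link shortener, the returned URL would typically be sent back to the browser as an HTTP redirect.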

Your security and legal teams will love GoLinksGPT, the AI feature in GoLinks, because it surfaces your resources without needing to access your underlying data. How does this work? GoLinksGPT only reads the name and description of the go links you've created. This keeps your private information super secure, with no data connectors required.
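To make the metadata-only idea concrete, here is a hypothetical sketch in which suggestions are computed from each link's name and description while the destination URL is never opened. Simple word overlap (with a small, assumed stop-word list) stands in for whatever model GoLinksGPT actually uses; `GoLink` and `suggest_links` are illustrative names only.

```python
from dataclasses import dataclass

STOP_WORDS = {"a", "an", "the", "is", "are", "for", "of", "to", "where", "what", "how"}


@dataclass(frozen=True)
class GoLink:
    name: str         # e.g. "presentation"
    description: str  # written by whoever created the link
    url: str          # destination; never fetched or read by suggest_links


def _words(text: str) -> set[str]:
    """Lowercase words in text, minus common stop words."""
    return set(text.lower().split()) - STOP_WORDS


def suggest_links(question: str, links: list[GoLink]) -> list[str]:
    """Suggest go links whose name or description shares words with the question."""
    asked = _words(question)
    return [
        f"go/{link.name}"
        for link in links
        # Only metadata is consulted; link.url is deliberately ignored.
        if asked & (_words(link.name) | _words(link.description))
    ]


links = [
    GoLink("presentation", "Q3 sales deck for the board",
           "https://docs.google.com/presentation/d/1yVcZihiShg"),
    GoLink("payroll", "How payroll is processed", "https://example.com/payroll"),
]
print(suggest_links("where is the Q3 sales deck", links))  # ['go/presentation']
```

Because the linked documents themselves are never read, there is no connector to secure and no document content to leak.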

GoSearch: Secure, AI-enhanced enterprise search

GoSearch is an enterprise search tool that provides a Google-like experience for all your company's knowledge. You choose which data connectors to enable and get semantic search while granting only the minimum access permissions it needs.

Another security benefit of GoSearch is that you can BYOC (bring your own cloud). This keeps all the data on your own servers. You can also BYOLLM (bring your own LLM), so you have ultimate control.
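"Bring your own LLM" generally means the search layer depends on an interface rather than a specific model vendor, so you can plug in whichever model you trust. The sketch below illustrates that design with Python's `typing.Protocol`; `LanguageModel`, `EchoModel`, and `SearchAssistant` are hypothetical names and do not describe GoSearch's actual API.

```python
from typing import Protocol


class LanguageModel(Protocol):
    """Anything with a complete() method can serve as the LLM (hypothetical interface)."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Placeholder model for testing; a real one would call your chosen or self-hosted LLM."""

    def complete(self, prompt: str) -> str:
        return f"(echo) {prompt}"


class SearchAssistant:
    def __init__(self, model: LanguageModel) -> None:
        self.model = model  # injected, so providers can be swapped without changing this class

    def answer(self, question: str, context: str) -> str:
        return self.model.complete(f"Context: {context}\nQuestion: {question}")


assistant = SearchAssistant(EchoModel())
print(assistant.answer("What is BYOLLM?", "Bring your own LLM"))
```

Swapping vendors then means passing a different object with a `complete()` method; the rest of the search code stays untouched.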

Getting started with secure AI in your workplace

GenAI brings a lot of benefits to the table — especially when you find a tool that perfectly fits your org’s needs. If you’re interested in getting started with GoLinks + GoSearch for ultimate AI-powered knowledge management, we’d love to talk! Click here to schedule a demo.
